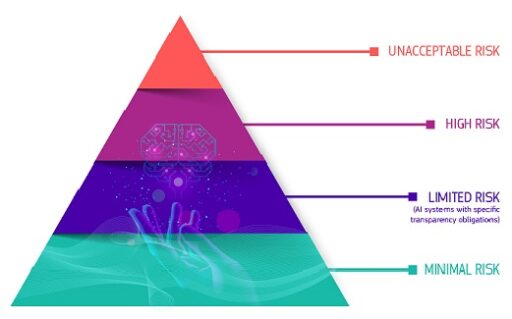

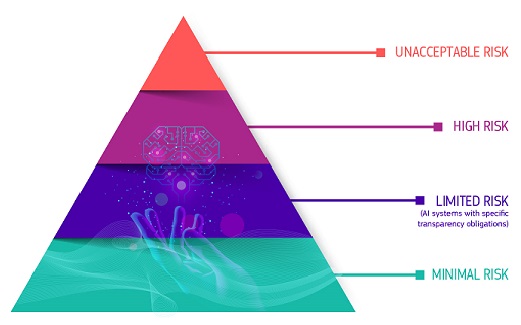

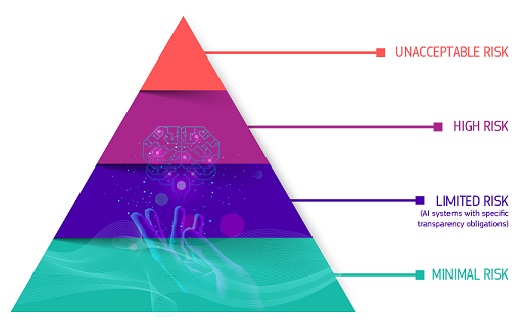

A Risk-based Approach

The AI Act defines four levels of risk for AI systems:

Unacceptable risk

All AI systems considered a clear threat to the safety, livelihoods and rights of people are banned. The AI Act prohibits eight practices, namely:

- harmful AI-based manipulation and deception

- harmful AI-based exploitation of vulnerabilities

- social scoring

- individual criminal offence risk assessment or prediction

- untargeted scraping of the internet or CCTV material to create or expand facial recognition databases

- emotion recognition in workplaces and education institutions

- biometric categorisation to deduce certain protected characteristics

- real-time remote biometric identification for law enforcement purposes in publicly accessible spaces

The prohibitions became effective in February 2025. The Commission has published two key documents to support the practical application of these prohibitions.

High risk

AI use cases that can pose serious risks to health, safety or fundamental rights are classified as high-risk. These high-risk use-cases include:

- AI safety components in critical infrastructures (e.g. transport), the failure of which could put the life and health of citizens at risk

- AI solutions used in education institutions that may determine access to education and the course of someone’s professional life (e.g. scoring of exams)

- AI-based safety components of products (e.g. AI application in robot-assisted surgery)

- AI tools for employment, management of workers and access to self-employment (e.g. CV-sorting software for recruitment)

- Certain AI use-cases utilised to give access to essential private and public services (e.g. credit scoring denying citizens the opportunity to obtain a loan)

- AI systems used for remote biometric identification, emotion recognition and biometric categorisation (e.g. AI system to retroactively identify a shoplifter)

- AI use-cases in law enforcement that may interfere with people’s fundamental rights (e.g. evaluation of the reliability of evidence)

- AI use-cases in migration, asylum and border control management (e.g. automated examination of visa applications)

- AI solutions used in the administration of justice and democratic processes (e.g. AI solutions to prepare court rulings)

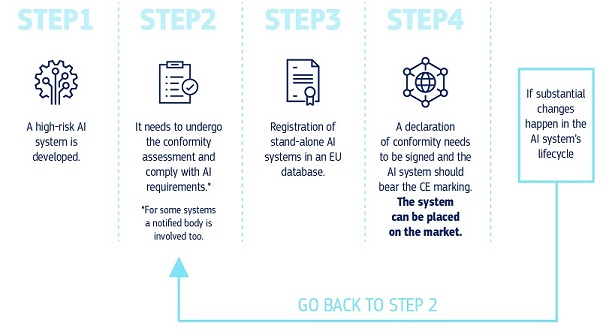

High-risk AI systems are subject to strict obligations before they can be put on the market:

- adequate risk assessment and mitigation systems

- high quality of the datasets feeding the system to minimise risks of discriminatory outcomes

- logging of activity to ensure traceability of results

- detailed documentation providing all information necessary on the system and its purpose for authorities to assess its compliance

- clear and adequate information to the deployer

- appropriate human oversight measures

- high level of robustness, cybersecurity and accuracy

The rules for high-risk AI will come into effect in August 2026, and in August 2027 for high-risk AI systems embedded into regulated products.

Transparency risk

This refers to the risks associated with a need for transparency around the use of AI. The AI Act introduces specific disclosure obligations to ensure that humans are informed when necessary to preserve trust. For instance, when using AI systems such as chatbots, humans should be made aware that they are interacting with a machine so they can take an informed decision.

Moreover, providers of generative AI have to ensure that AI-generated content is identifiable. On top of that, certain AI-generated content should be clearly and visibly labelled, namely deep fakes and text published with the purpose of informing the public on matters of public interest.

The transparency rules of the AI Act will come into effect in August 2026.

Minimal or no risk

The AI Act does not introduce rules for AI that is deemed minimal or no risk. The vast majority of AI systems currently used in the EU fall into this category. This includes applications such as AI-enabled video games or spam filters.
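The four levels described above can be summarised as a small lookup table. The following Python sketch is purely illustrative: the names `RiskLevel`, `CONSEQUENCES`, `EXAMPLES` and `consequence_for` are hypothetical, the examples are taken from the text above, and the mapping is a reading aid rather than a legal classification tool.

```python
from enum import Enum


class RiskLevel(Enum):
    """The four risk levels named in this article."""
    UNACCEPTABLE = "unacceptable risk"
    HIGH = "high risk"
    TRANSPARENCY = "transparency risk"
    MINIMAL = "minimal or no risk"


# Consequence of each level, paraphrased from the article.
CONSEQUENCES = {
    RiskLevel.UNACCEPTABLE: "banned",
    RiskLevel.HIGH: "strict obligations before being put on the market",
    RiskLevel.TRANSPARENCY: "specific disclosure obligations",
    RiskLevel.MINIMAL: "no rules introduced by the AI Act",
}

# A few examples mentioned in the article (illustrative, not exhaustive).
EXAMPLES = {
    "social scoring": RiskLevel.UNACCEPTABLE,
    "CV-sorting software for recruitment": RiskLevel.HIGH,
    "chatbot": RiskLevel.TRANSPARENCY,
    "spam filter": RiskLevel.MINIMAL,
}


def consequence_for(use_case: str) -> str:
    """Describe the level and consequence for one of the listed examples."""
    level = EXAMPLES[use_case]
    return f"{use_case}: {level.value} -> {CONSEQUENCES[level]}"


if __name__ == "__main__":
    for name in EXAMPLES:
        print(consequence_for(name))
```

Real classification depends on the system's intended purpose and context of use, which a static table like this cannot capture.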

How does it all work in practice for providers of high-risk AI systems?

Once an AI system is on the market, authorities are in charge of market surveillance, deployers ensure human oversight and monitoring, and providers have a post-market monitoring system in place. Providers and deployers will also report serious incidents and malfunctioning.

What are the rules for General-Purpose AI models?

General-purpose AI (GPAI) models can perform a wide range of tasks and are becoming the basis for many AI systems in the EU. Some of these models could carry systemic risks if they are very capable or widely used. To ensure safe and trustworthy AI, the AI Act puts in place rules for providers of such models. This includes transparency and copyright-related rules. For models that may carry systemic risks, providers should assess and mitigate these risks. The AI Act rules on GPAI became effective in August 2025.

Supporting compliance

In July 2025, the Commission published three key instruments to support the responsible development and deployment of GPAI models:

- The Guidelines on the scope of the obligations for providers of GPAI models clarify the scope of the GPAI obligations under the AI Act, helping actors along the AI value chain understand who must comply with these obligations.

- The GPAI Code of Practice is a voluntary compliance tool submitted to the Commission by independent experts, which offers practical guidance to help providers comply with their obligations under the AI Act related to transparency, copyright, and safety & security.

- The Template for the public summary of training content of GPAI models requires providers to give an overview of the data used to train their models. This includes the sources from which the data was obtained (comprising large datasets and top domain names). The template also requests information about data processing aspects to enable parties with legitimate interests to exercise their rights under EU law.

These tools are designed to work hand-in-hand. Together, they provide a clear and actionable framework for providers of GPAI models to comply with the AI Act, reducing administrative burden, and fostering innovation while safeguarding fundamental rights and public trust.

The Commission is also developing other support tools that offer guidance on how to comply with the AI Act’s transparency rules:

- The Code of Practice on marking and labelling of AI-generated content selected by the AI Office. The code will be a voluntary tool to help providers and deployers of generative AI systems comply with transparency obligations. These include marking AI-generated content and disclosing the artificial nature of images and audio (including deepfakes), as well as text.

- The Guidelines on transparent AI systems to clarify the scope of application, relevant legal definitions, the transparency obligations, the exceptions and related horizontal issues.

These support instruments are under preparation and will be published in the second quarter of 2026.

Governance and implementation

The European AI Office and authorities of the Member States are responsible for implementing, supervising and enforcing the AI Act. The AI Board, the Scientific Panel and the Advisory Forum steer and advise the AI Act’s governance. Find out more details about the Governance and enforcement of the AI Act.

Application timeline

The AI Act entered into force on 1 August 2024, and will be fully applicable 2 years later on 2 August 2026, with some exceptions:

- prohibited AI practices and AI literacy obligations have applied since 2 February 2025

- the governance rules and the obligations for GPAI models became applicable on 2 August 2025

- the rules for high-risk AI systems – embedded into regulated products – have an extended transition period until 2 August 2027
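The staggered dates above can be read as a simple lookup by date. The following Python sketch is one way to express that, assuming the dates exactly as listed in this article; the names `MILESTONES` and `applicable_rules` are hypothetical, and the sketch does not reflect any timeline changes proposed in the Digital Package on Simplification.

```python
from datetime import date

# Application dates as stated in this article (start date, rule set).
MILESTONES = [
    (date(2025, 2, 2), "prohibited AI practices and AI literacy obligations"),
    (date(2025, 8, 2), "governance rules and obligations for GPAI models"),
    (date(2026, 8, 2), "general application of the AI Act"),
    (date(2027, 8, 2), "high-risk rules for AI systems embedded into regulated products"),
]


def applicable_rules(on: date) -> list[str]:
    """Return the rule sets whose application date is on or before `on`."""
    return [label for start, label in MILESTONES if start <= on]


if __name__ == "__main__":
    for label in applicable_rules(date(2026, 1, 1)):
        print(label)
```

For example, calling `applicable_rules(date(2026, 1, 1))` returns the first two entries, since only the February 2025 and August 2025 milestones have passed by that date.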

What is the Commission’s proposal to simplify the AI Act implementation?

The Digital Package on Simplification proposes amendments to simplify the AI Act implementation and ensure the rules remain clear, simple, and innovation-friendly.

The Commission proposes to adjust the timeline for the application of high-risk rules by up to 16 months, so that the rules apply only once companies have the right support tools, such as standards, to facilitate implementation.

The Commission is also proposing targeted amendments to the AI Act that will:

- Reinforce the AI Office’s powers and centralise oversight of AI systems built on general-purpose AI models, reducing governance fragmentation;

- Extend certain simplifications that are granted to small and medium-sized enterprises (SMEs) and small mid-cap companies (SMCs), including simplified technical documentation requirements;

- Require the Commission and Member States to promote AI literacy and ensure continuous support to companies by building on existing efforts (such as the AI Office’s repository of AI literacy practices, recently revamped), while keeping training obligations for high-risk deployers in place;

- Broaden measures in support of compliance so that more innovators can benefit from regulatory sandboxes that will be set up from 2028, and extend the possibilities for real-world testing;

- Adjust the AI Act’s procedures to clarify its interplay with other laws and improve its overall implementation and operation.

All this complements actions which the Commission and its AI Office are already taking to provide clarity for businesses and national authorities, for instance, through guidelines, codes of practice, and the AI Act Service Desk.

The legislative proposal was adopted on 19 November 2025. The European Parliament and the Council of the EU are now discussing and negotiating the Digital Omnibus on AI.