Google is significantly expanding its suite of personal safety tools, giving users far more granular control over how their private information surfaces across the internet. The upgrades, announced in February 2026, represent the search giant’s most aggressive push yet to help individuals scrub sensitive data from search results — a move that arrives amid growing consumer anxiety over data exposure, identity theft, and the proliferation of AI-powered scraping tools that can harvest personal details at scale.

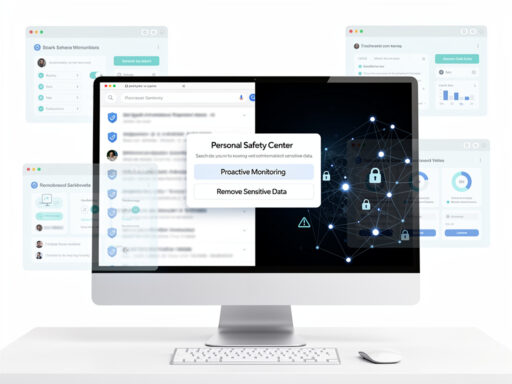

The enhancements build on Google’s “Results About You” feature, first introduced in 2022, which lets users request the removal of search results containing their personal information. Now, the tool has been upgraded to detect and flag a broader range of sensitive data types, automate more of the removal process, and proactively notify users when new instances of their personal information appear in search results. For privacy-conscious consumers and the cybersecurity professionals who advise them, the changes signal a meaningful shift in how the world’s dominant search engine approaches individual data protection.

A Broader Net for Personal Information Detection

As reported by Ars Technica, the upgraded safety tools now cover an expanded set of personal data categories. Previously, the “Results About You” dashboard primarily targeted phone numbers, home addresses, and email addresses that appeared in search results. The new version extends detection to include financial identifiers such as bank account numbers, government-issued ID numbers beyond Social Security numbers, medical record identifiers, and login credentials that may have been exposed in data breaches.

Google has also improved the tool’s ability to identify personal information that appears in less obvious contexts, such as text embedded in PDFs, cached pages, image metadata, and data broker listings that previously evaded the system’s detection algorithms. The company says it has deployed updated machine learning models trained to recognize personal data patterns across a wider variety of document formats and web page structures. If those models perform as described, that would be a notable technical achievement, given the sheer diversity of ways personal information can be published, scraped, and redistributed across the web.

Proactive Alerts and Automated Removal Requests

Perhaps the most consequential upgrade is the shift from a reactive to a proactive model. In earlier iterations, users had to manually search for their own information and submit individual removal requests. The new system continuously monitors Google’s index for new appearances of a user’s registered personal data and sends alerts when matches are found. Users can then approve removal requests with a single tap, or configure the system to automatically submit requests for certain data categories without requiring manual approval each time.

This automation addresses one of the most persistent criticisms of the original tool: that it placed an unreasonable burden on users to police their own data across an essentially infinite web. Privacy advocates had long argued that expecting individuals to continuously monitor search results for their personal information was impractical, particularly for people who had been victims of doxxing, domestic abuse, or large-scale data breaches. Google’s move toward continuous, automated monitoring represents a concession to those concerns, though the company is careful to note that removal from Google’s search index does not delete the underlying content from the web itself.

The Data Broker Problem and Google’s Expanding Role

A significant portion of the personal information that surfaces in Google search results originates from data broker websites — companies that aggregate and sell consumer data harvested from public records, commercial transactions, and online activity. Google’s upgraded tools now include enhanced capabilities for identifying and targeting data broker listings specifically. According to Ars Technica, the system can now recognize common data broker page templates and prioritize removal requests for these sources, which tend to be among the most prolific publishers of personal information.

This is a strategically important development. The data brokerage industry has grown into a multi-billion-dollar sector, and regulatory efforts to constrain it have been slow and uneven. While states like California, Vermont, and Texas have enacted data broker registration laws, there is no comprehensive federal statute governing the industry. Google’s willingness to de-index data broker content more aggressively could have an outsized practical impact, since for most consumers, information that doesn’t appear in Google search results is, in practice, hard to find. Critics, however, note that this gives Google enormous unilateral power over what information is discoverable, a tension that has shadowed the company’s content moderation decisions for years.

Dark Web Monitoring Enters the Mainstream

In a parallel expansion, Google has also enhanced the dark web monitoring capabilities bundled into Google One subscriptions. The dark web report feature, which scans known breach databases and underground marketplaces for a user’s personal information, now covers additional data types and provides more actionable guidance when exposures are detected. Users receive step-by-step remediation recommendations, including prompts to change compromised passwords, enable two-factor authentication on affected accounts, and freeze credit reports when financial data is involved.

The integration of dark web monitoring with the search result removal tools creates what Google describes as a more holistic safety ecosystem. A user who discovers via the dark web report that their email and password were exposed in a breach can simultaneously check whether that information has surfaced in search results and initiate removal if it has. This kind of cross-functional integration was previously available largely through paid third-party services like Aura, Norton LifeLock, and DeleteMe, which charge annual subscription fees ranging from roughly $100 to $250. Google’s inclusion of comparable features in its free tier and modestly priced Google One plans could put significant competitive pressure on those services.

Privacy as Product Strategy

Google’s investment in personal safety tools should be understood not only as a consumer protection initiative but also as a strategic business decision. The company faces intensifying regulatory scrutiny worldwide, including ongoing antitrust proceedings in the United States and the European Union’s enforcement of the Digital Services Act and General Data Protection Regulation. Demonstrating a proactive commitment to user privacy helps Google manage regulatory risk and maintain consumer trust at a time when both are under strain.

There is also a competitive dimension. Apple has made privacy a central pillar of its brand identity, and smaller search engines like DuckDuckGo and Brave have built their market positions explicitly around data protection. By expanding its own privacy tools, Google blunts the argument that using its products requires sacrificing personal data security. The company can point to features like “Results About You” and dark web monitoring as evidence that it takes user privacy seriously, even as its core advertising business continues to depend on the collection and analysis of user data — a contradiction that privacy advocates are quick to highlight.

What the Upgrades Don’t Address

For all the improvements, significant limitations remain. Google’s removal tools only affect what appears in Google Search results. They do not remove content from the source websites, other search engines, social media platforms, or cached versions stored by third-party archival services. A determined adversary — whether a stalker, a scammer, or simply a curious neighbor — can still find personal information through alternative channels. Google acknowledges this limitation in its support documentation but argues that removing information from the world’s most-used search engine meaningfully reduces the practical risk of exposure.

Additionally, the tools require users to opt in and provide the personal information they want monitored, which creates its own privacy paradox: to protect your data, you must first give it to Google. The company says this information is stored securely and used only for the purpose of monitoring and removal, but for users already skeptical of Google’s data practices, this may be a difficult proposition to accept. The tension between Google as data collector and Google as data protector remains unresolved, and likely will for as long as the company’s business model depends on both roles.

The Broader Implications for Digital Privacy

Google’s expanded safety tools arrive at a moment when the economics of personal data are shifting rapidly. The rise of generative AI has made personal information more valuable and more vulnerable than ever. AI models can be trained on scraped web data that includes personal details, and AI-powered social engineering attacks can exploit exposed information with unprecedented sophistication. The tools Google is deploying are, in some respects, a defensive response to threats that the broader technology industry — Google included — has helped create.

For industry observers, the key question is whether these tools represent a genuine inflection point or merely incremental improvement. The shift toward proactive monitoring and automated removal is meaningful, but the underlying structural problems — a largely unregulated data brokerage industry, inconsistent privacy laws, and a web architecture that makes information nearly impossible to permanently delete — remain firmly in place. Google’s tools can mitigate the symptoms, but the disease requires policy interventions that no single company, however dominant, can deliver on its own.

Still, for the millions of users who will discover through these tools that their phone number, home address, or financial information is freely available in search results, the upgrades offer something tangible: a mechanism, however imperfect, for clawing back a measure of control over their digital lives. In an era when personal data is currency, that is no small thing.